Protecting Privacy in Humanitarian Response

Project description

Governments and humanitarian organizations around the world are increasingly embracing cutting-edge technologies to strengthen crisis response and deliver aid. These innovations, while promising, often depend on the collection and use of individuals’ personal data, raising significant concerns about privacy and data protection. Advances in privacy-enhancing technologies, such as differential privacy, offer potential solutions. However, differential privacy entails an inherent tradeoff: as privacy requirements become more stringent, the effectiveness of humanitarian applications can decline. In some contexts this privacy-accuracy tradeoff is mild and practical; in others it can become untenable.

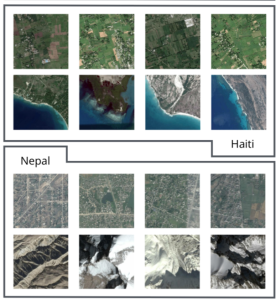

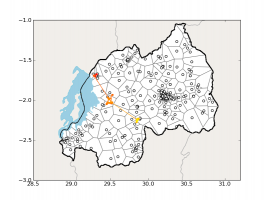

When the effectiveness of a humanitarian program hinges on population-level insights, differential privacy can strike a favorable balance between privacy and program effectiveness. In a series of case studies, we use personal mobility data from mobile phones in Afghanistan and Rwanda to create differentially private mobility matrices. These matrices contain calibrated random noise that provably limits how much anyone can learn about an individual’s movement traces, while still supporting effective decision-making. The case studies cover real-world scenarios such as pandemic management and post-disaster aid delivery, and show that interventions maintained high levels of accuracy even under strict privacy safeguards. Our work details both technical considerations and practical tradeoffs, equipping policymakers to responsibly deploy privacy-preserving mobility analytics without undermining humanitarian objectives.
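To make the idea of a noisy mobility matrix concrete, the sketch below adds Laplace noise to a small origin-destination count matrix. It is an illustration only, not the method used in the case studies: the Laplace mechanism, the function names, the toy counts, and the assumption that each person contributes at most `max_trips_per_person` trips are all hypothetical choices made for this example.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mobility_matrix(counts, epsilon, max_trips_per_person=1, seed=None):
    """Return a noisy copy of an origin-destination count matrix.

    Assumption (hypothetical): adding or removing one person changes the
    matrix by at most `max_trips_per_person` in total, so Laplace noise
    with scale max_trips_per_person / epsilon on every cell gives
    epsilon-differential privacy under that assumption.
    """
    rng = random.Random(seed)
    scale = max_trips_per_person / epsilon
    return [[c + laplace_noise(scale, rng) for c in row] for row in counts]


# Toy data: counts[i][j] = number of trips from region i to region j.
counts = [
    [120, 30, 5],
    [25, 200, 12],
    [4, 10, 80],
]

noisy = private_mobility_matrix(counts, epsilon=0.5, seed=0)
for row in noisy:
    print([round(value, 1) for value in row])
```

Under these assumptions, the expected absolute error in each cell equals the noise scale, `max_trips_per_person / epsilon`. A smaller `epsilon` (stronger privacy) therefore means noisier counts, which is the privacy-accuracy tradeoff described above; large flows between regions remain clearly visible, while small counts are the first to be obscured.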

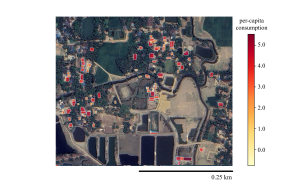

In contrast, programs that depend on individual-level learning (such as those targeting benefits or aid to specific people) face greater challenges with standard differential privacy, which is designed to protect individuals by supporting only aggregate, not individual, inferences. To address this gap, we introduced targeted differential privacy, a new framework tailored to scenarios where decisions hinge on the data of particular individuals. This approach allows humanitarian organizations to make algorithmic eligibility decisions for programs like anti-poverty cash transfers or loans while extending strong, customizable privacy protections at the individual level. Data from initiatives in Togo and Nigeria show that, while increased privacy does come with some reduction in predictive accuracy, in many cases meaningful privacy gains are possible with only moderate impact on effectiveness. These findings empower organizations to responsibly harness personal data for social good, while balancing the needs for privacy and impact in a transparent, quantifiable manner.

Related Publications

- Kahn, Zoe, Meyebinesso Farida Carelle Pere, Emily Aiken, Nitin Kohli, and Joshua E. Blumenstock. “Expanding Perspectives on Data Privacy: Insights from Rural Togo.” Proceedings of the ACM on Human-Computer Interaction 9, no. 2 (2025): 1-29. [pdf]

- Narayan, Sanjiv M., Nitin Kohli, and Megan M. Martin. “Addressing contemporary threats in anonymised healthcare data using privacy engineering.” Nature Digital Medicine 8, no. 1 (2025): 145. [pdf]

- Kohli, Nitin, Emily Aiken, and Joshua E. Blumenstock. “Privacy Guarantees for Personal Mobility Data in Humanitarian Response.” Nature Scientific Reports 14 (2024): 28565. [pdf]

- Kohli, Nitin, and Paul Laskowski. “Differential privacy for black-box statistical analyses.” Proceedings on Privacy Enhancing Technologies (2023). [pdf]